It’s your business rules that allow you to turn all the building blocks provided by Click Commerce Extranet into your own solution. Business rules manifest themselves in the names of the workflow states you use, how a project moves through its lifecycle and the actions available to the user at each step along the way, how you define your users, your security policies, the information you collect, and the criteria against which that information is verified. All of these configuration choices, supported by the Click Commerce Extranet platform, are what make your site uniquely yours. Verifying information according to your institutional requirements involves the implementation of Validation Rules. This post presents one approach to their implementation.

Consider this validation rule:

In your IACUC solution, if animals are to be sourced through donation, you require that there be a description of the quarantine procedures that will be used.

Sounds like a reasonable requirement, right? So, where would you enforce that rule? A key advantage of the Click Commerce Extranet platform is its flexibility, but sometimes determining the best approach requires that you weigh the pros and cons. This rule is an example of a conditionally required field. Here are a few of the most common approaches to implementing this type of rule:

- SmartForm branching

You can enforce the rule by separating the selection of animal source and the follow-up questions onto different SmartForm steps and using a combination of SmartForm branching and required fields. This is by far the easiest implementation because it allows you to take advantage of the built-in required fields check and can be accomplished without any additional scripting. It does, however, require that the questions be separated into multiple SmartForm steps. This isn’t a big deal if there is already an additional step where the follow-up question could be placed, but this may not always be the case.

- Conditional Validation Logic

With a bit more work you can keep the questions on a single view and implement conditional validation logic in a script. This allows you to keep the fields together, but the follow-up question will be visible to the user in all cases. You will need to include instructional text in the form to let the user know that the second question is required if the initial question is answered in a particular way.

- Conditional Validation Logic with Dynamic Hide/Show

With even more work, you could dynamically show the relevant dependent questions only when the user is required to answer them. They would otherwise be hidden. This technique is the subject of an upcoming post, but it’s important to understand that the enforcement of the validation rule is still accomplished through custom script.
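The conditional check that both scripted options rely on can be sketched in a few lines. This is a minimal Python illustration rather than Extranet script: the field names `animal_source` and `quarantine_procedures` and the function `validate_animal_source_view` are hypothetical, and a real validation script would read the values through the platform's own API.

```python
from typing import Dict, List


def validate_animal_source_view(fields: Dict[str, str]) -> List[str]:
    """Return error messages for the view; an empty list means it is valid.

    The field names used here are hypothetical placeholders.
    """
    errors: List[str] = []
    source = fields.get("animal_source", "").strip().lower()
    quarantine = fields.get("quarantine_procedures", "").strip()

    # The follow-up question is only required when animals are donated.
    if source == "donation" and not quarantine:
        errors.append(
            "A description of quarantine procedures is required "
            "when animals are sourced through donation."
        )
    return errors
```

Returning a list of messages rather than a single boolean leaves room for several rules on the same view to report their errors together.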

By far, the easiest implementation is the first option, SmartForm branching, because the Extranet application will perform all the validation checks for you. But what if your rule doesn’t fit within a simple required field check, or your users won’t let you separate the questions onto different SmartForm steps? In these cases, you will have to implement some logic. Knowing that, you are still faced with the decision of where to put it. Again, there are options:

- Add custom logic in a View Validation Script

This is the preferred approach as the configuration interfaces are exposed via the standard Web-based configuration tools.

- Override the Project.setViewData() method

This technique has been superseded by the View Validation Script. Before that script hook was available, it was the best place to add custom logic, but it is no longer the recommended approach.

- Override the Project.validate() method

This method is called when the entire project is validated, as happens when the user clicks on Hide/Show errors in the SmartForm or executes an activity where the "validate Before Execution" option is set. It is not invoked, however, when a single SmartForm step is saved, so it is really only a good place for project-level validation.

Option 1 (the View Validation Script) is the most common approach and is much preferred over option 2 (overriding Project.setViewData()). Both approaches allow you to enforce validation rules whenever a view (or SmartForm step) is saved. This is what I call “view validation.” All view validation rules must be met before the information in the view can be considered valid. This means that a user cannot save changes or continue through the SmartForm until all rules for the current step are met. This is applicable to most needs but not all. Let’s consider another rule that also must be enforced:

In order for a protocol to be submitted for review, the PI and Staff must have all met their training requirements.

Enforcing this rule when posting a view or SmartForm step would be overly restrictive. The PI and Staff should be able to complete the forms even if their training is incomplete. The rule is that the PI cannot submit the protocol for review until everyone has met the training requirements, so view validation won’t work. What is needed is project-level validation, which can be accomplished by overriding the Project.validate() method.
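To make the distinction concrete, here is a rough Python sketch of a project-level check that runs only at submission time. The `Person` and `Protocol` classes and both function names are hypothetical stand-ins for illustration; they are not the Extranet data model or the actual Project.validate() signature.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Person:
    name: str
    training_complete: bool


@dataclass
class Protocol:
    pi: Person
    staff: List[Person] = field(default_factory=list)


def validate_for_submission(protocol: Protocol) -> List[str]:
    """Project-level check: meant to run before submission, not on every save."""
    people = [protocol.pi] + protocol.staff
    return [
        f"{person.name} has not met the training requirements."
        for person in people
        if not person.training_complete
    ]


def can_submit(protocol: Protocol) -> bool:
    """Submission is allowed only when the project-level check finds no errors."""
    return not validate_for_submission(protocol)
```

Because nothing here is tied to saving a view, the PI and Staff can keep filling in forms while training is outstanding; only the submit step is blocked.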

So this means that some rules are implemented in a View Validation script using the web-based configuration tools, while other rules are implemented using Entity Manager. This approach definitely works and is seen in a large number of sites. The downside is that code is maintained in different places and script is required for all rules.

In addition, none of the scripting approaches takes into consideration that many rules follow common patterns. For example,

- If field A has value X, then a response for field B is required, or

- Field A must satisfy a specific constraint, such as a minimum or maximum length

What if these patterns could be formalized into a standard way of defining a validation rule? What if all rules were defined in the same way and in the same place? Would that make implementation, testing and maintenance easier? I certainly think so.
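As a thought experiment, the two patterns above could be captured as data rather than script. The Python sketch below is one possible shape under my own assumptions: `RequiredIfRule`, `LengthRule`, `run_rules`, and all field names are hypothetical and are not taken from any Extranet type.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class RequiredIfRule:
    """If trigger_field equals trigger_value, required_field must be answered."""
    trigger_field: str
    trigger_value: str
    required_field: str
    message: str

    def check(self, data: Dict[str, str]) -> Optional[str]:
        triggered = data.get(self.trigger_field) == self.trigger_value
        answered = bool(data.get(self.required_field, "").strip())
        return self.message if triggered and not answered else None


@dataclass
class LengthRule:
    """The value of field_name must fall within the given length bounds."""
    field_name: str
    message: str
    min_length: int = 0
    max_length: Optional[int] = None

    def check(self, data: Dict[str, str]) -> Optional[str]:
        length = len(data.get(self.field_name, ""))
        too_short = length < self.min_length
        too_long = self.max_length is not None and length > self.max_length
        return self.message if too_short or too_long else None


def run_rules(rules, data: Dict[str, str]) -> List[str]:
    """Evaluate every rule and collect the messages of those that fail."""
    results = (rule.check(data) for rule in rules)
    return [message for message in results if message is not None]


# Rules are now plain definitions; no per-rule script is written.
rules = [
    RequiredIfRule("animal_source", "Donation", "quarantine_procedures",
                   "Quarantine procedures are required for donated animals."),
    LengthRule("protocol_title", "Title must be 5 to 100 characters.",
               min_length=5, max_length=100),
]
```

With rules expressed this way, adding a new conditionally required field means adding one more entry to the list, and every rule is tested through the same `run_rules` path.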

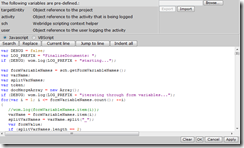

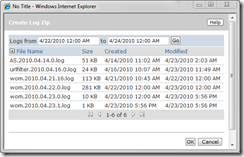

Introducing the ProjectValidationRule implementation

ProjectValidationRule is a Selection Custom Data Type whose sole purpose is to serve as a central place to define and execute your site’s validation rules. Some of you may have previous experience with a CDT named “SYS_Validation Rules” and any similarities you see are not a coincidence. That type provided the seeds from which this new implementation was grown. It allows for rules following common patterns to be defined without authoring any additional script and provides the flexibility to define whether the rule is to be enforced at a View or Project Level. As an added bonus, you can also define activity specific rules.
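The idea of tagging each rule with the level at which it is enforced can be illustrated with a short Python sketch. To be clear, this is not the data model of the ProjectValidationRule package: `Scope`, `ScopedRule`, `validate`, and the example names in the usage note are all hypothetical and exist only to show how one rule list can serve view, project, and activity checks.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Optional


class Scope(Enum):
    VIEW = "view"
    PROJECT = "project"
    ACTIVITY = "activity"


@dataclass
class ScopedRule:
    scope: Scope
    message: str
    is_satisfied: Callable[[Dict[str, str]], bool]
    target: Optional[str] = None  # view or activity name; unused for PROJECT


def validate(rules: List[ScopedRule], scope: Scope, data: Dict[str, str],
             target: Optional[str] = None) -> List[str]:
    """Run only the rules defined for the requested scope (and target)."""
    errors: List[str] = []
    for rule in rules:
        if rule.scope is not scope:
            continue
        # View and activity rules apply only to the view or activity they name.
        if scope is not Scope.PROJECT and rule.target != target:
            continue
        if not rule.is_satisfied(data):
            errors.append(rule.message)
    return errors
```

Saving a view would then call something like `validate(rules, Scope.VIEW, data, target="Animal Source")`, while submission would call `validate(rules, Scope.PROJECT, data)`, with both drawing on the same central list.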

Download the ProjectValidationRule package for specific implementation details. This approach is still evolving. It has proven very effective so far, but there is always room for improvement, so feedback is appreciated.

Cheers!