Extranet 5.6 and 5.7 enabled greater access to the data stored in the underlying database by exposing more data in standard SQL tables and automatically creating SQL Views that simplify the task of authoring SQL Queries to extract information. All of this is intended to support advanced reporting and data extraction needs. In other words, these features are targeted at getting data out of Extranet. Thinking about how best to get data into the Extranet database is a completely different matter, however.

Thursday, December 23, 2010

Wednesday, December 22, 2010

An Approach to Parallel Amendments

Goals

This post outlines steps to support an implementation of parallel amendments. The described approach is guided by the following principles:

Performance

Only create data that is necessary to support the functional requirements, and avoid long-running transactions. New amendments should not require the creation of a complete copy of the previously approved protocol.

Ease of Review

Provide features that improve the overall experience of the reviewer.

Efficiency

Avoid the review bottleneck by allowing amendments of different types to be in process at the same time.

Ease of Maintenance

While supporting the other principles, the design should minimize redundant configuration.

This approach is based on ideas that have been floating around in my head for a while now, largely driven by my desire to support parallel amendments effectively and to dramatically reduce our dependence on cloning. We’re in the middle of the first real implementation of this approach and, so far, so good.

Wednesday, November 3, 2010

ProjectValidationRule Version 2

Since initially making the Project Validation Rules available for download, I’ve seen them successfully incorporated into several sites. I’m now happy to post version 2 of this package, which extends the core features described in the original post on December 4th, 2009.

Friday, October 15, 2010

Developer Quick Tip: A URL that’s easier to share

Today, I thought I’d share a little-known trick to shorten and simplify the URLs you share with others. I’m sure you’re all familiar with the typical URL to your site, which looks something like this:

LayoutInitial is a primary driver of most content pages, including personal pages, and project workspaces. The way it knows which project workspace, personal page, or content page to display is by looking at the value passed via the Container query string parameter. While the computer has no problem reading this value, it’s not so easy for us humans to share this with each other. But, wait! There’s a better way…

Monday, October 11, 2010

2010 AALAS Conference

It’s hard to believe a year has gone by since my last visit to the annual AALAS conference, but there’s no avoiding the truth, as I’m now at 32,000 feet on my way to Atlanta for this year’s edition of the show. Last year was my first time, and it was interesting to see the wide variety of exhibitors showing their wares, big and small. On the large end, I saw massive automated feeding and cleaning systems, and on the small end, I was amazed at the grain-of-rice-sized RFID chips designed to be injected under the skin of individual mice.

Saturday, September 25, 2010

Developer Quick Tip: sch.appendHTML() as a debugging tool

As I’m sure you’ve discovered by now, the Extranet Framework provides a rich set of intrinsic objects and APIs that you can use to truly customize your site. One method I want to highlight today is sch.appendHTML().

Friday, September 3, 2010

A way to think about Security Policies

Anyone who has done any configuration within Click Commerce Extranet has most likely had to configure the security policies. These policies are an essential part of any fully configured site. It’s those policies that define who can access project information, perform Activities, interact with Reviewer Notes, and view information in the project’s audit trail. Proper configuration of Security Policies is critical to your site’s success for two primary reasons: making sure the right people can do the right things at the right time, and avoiding poor performance.

Even if you’ve configured security policies before, this post should be useful as it describes a way of thinking about how best to configure your policies.

Wednesday, July 28, 2010

Phone, Web Conferencing and Email are all inferior

As I sit here in the Detroit airport facing a 4-hour delay and a scheduled arrival back in Portland at 2AM PST, it would be easy for me to rail against the need for face-to-face visits, but I won’t. It’s incredibly valuable to get together with the team that is ultimately responsible for delivering solutions, and countless updates of those solutions, to a user base that is always asking for more. In fact, my visit with just such a team this week at the University of Michigan only serves to reinforce my belief that there is no replacement for the occasional face-to-face get-together. Phone, Web Conferencing, and Email are all inferior to physical presence.

Tuesday, July 20, 2010

Context is king when it comes to security

This friendly little reminder comes via Scott Mann from University of South Florida:

I found something in our site that I wanted to pass along for the benefit of your developers (yes, we’re still finding stuff). Today’s issue is that we were getting email failures for people we knew had access to projects. They were failing on the Reschedule activity which is supposed to notify the PI and reviewers that the project has been moved to another meeting. Unfortunately, the notifications were configured on the activity, whose read policy properly blocks the PI since the form displays the names of reviewers. Since the notification’s context is the activity, and the recipient does not have access to the activity, the notification fails. It’s an easy mistake to make, but a good lesson for understanding the impact of activity security and the importance of context.

First let me say thanks to Scott for his contribution. It’s nice when others write my blog posts for me. ;-) This is a first but hopefully not the last (hint, hint, nudge, nudge). Scott is absolutely right and makes a point that I think deserves further elaboration.
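To make Scott’s point concrete, here is a minimal, self-contained model of the rule he describes: a notification is evaluated in the context of the object it is attached to, and a recipient who cannot read that object causes the notification to fail. Everything here (`canRead`, `sendNotification`, and the role-list policy shape) is hypothetical and only illustrates the idea; in Extranet the check is performed by the framework’s security policies, not by code like this.

```javascript
// Hypothetical model (not the Extranet API): each context object lists the
// roles its read policy allows.
function canRead(user, context) {
  return user.roles.some((role) => context.readRoles.includes(role));
}

// A notification is evaluated in the security context of the object it is
// attached to; recipients who cannot read that object cause a failure.
function sendNotification(context, recipients) {
  const delivered = [];
  const failed = [];
  for (const user of recipients) {
    (canRead(user, context) ? delivered : failed).push(user.name);
  }
  return { delivered, failed };
}

const project = { name: "Project", readRoles: ["PI", "Reviewer", "Staff"] };
// The Reschedule form shows reviewer names, so its read policy excludes the PI.
const rescheduleActivity = { name: "Reschedule", readRoles: ["Reviewer", "Staff"] };
const pi = { name: "PI Smith", roles: ["PI"] };
const reviewer = { name: "Reviewer Jones", roles: ["Reviewer"] };

console.log(sendNotification(rescheduleActivity, [pi, reviewer]));
console.log(sendNotification(project, [pi, reviewer]));
```

With the activity as the context, the PI lands in the failed list; with the project as the context, both recipients are delivered. That is the essence of the lesson: the same recipients can succeed or fail depending purely on which object the notification is attached to.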

Tuesday, July 13, 2010

Tips for managing WebrCommon files

Today’s post is short and sweet and I hope will save you a headache or two down the road. It’s related to managing the contents of your WebrCommon directory.

Tip #1

As most of you have already upgraded to Extranet 5.6 or are actively working your way there, it’s a good opportunity to emphasize that the best way to add or update your WebrCommon files is through Site Designer. This is especially true with Extranet 5.6 because, as of that release, the Click Commerce Extranet framework actually manages two locations for the WebrCommon files. In the past, you were able to get away with simply dropping files directly into the appropriate directory in the Windows file system. With the addition of the new location, this isn’t enough and can leave you scratching your head, wondering why your newly updated custom.css file doesn’t seem to be working. If you drag the file into Site Designer, on the other hand, all the locations are updated correctly. More information about the changes to WebrCommon in Extranet 5.6 can be found in this article: HOWTO: Manage the Webrcommon Directory in Extranet 5.6

Even in Extranet 5.5.3, there are benefits to dragging and dropping in Site Designer. Using this approach instead of direct file system access will avoid issues related to WebrCommon files whose file attributes or permissions are set in such a way as to break backup/restore or use within your site. Dropping the files into WebrCommon via Site Designer ensures they are set up correctly.

It’s as easy as Drag-and-Drop.

Tip #2

Since you are updating the contents of your WebrCommon directory, I can safely assume that you are doing this in your development store that is integrated with Source Control (right?…please tell me I’m right!). This means that any new files need to be added to your version repository as well. In most cases, Process Studio is the tool you use to do this, but there is one exception: binary files such as images. For binary files, you should use the Visual SourceSafe client directly to add or update them. This is because Process Studio assumes that files are textual. This assumption doesn’t work too well when they aren’t, and it can result in images not looking right. Once the files are in source control, however, you don’t need to worry about them being included in your next configuration update and correctly applied to your test and production stores. Support for binary files will be added to Process Studio with the release of Extranet 6.0.
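If you want a quick way to decide which tool a given file belongs in, a common heuristic is to treat a file as binary if its first few kilobytes contain a NUL (0x00) byte. The sketch below applies that heuristic; it is an assumption of this example, not how Process Studio or Visual SourceSafe actually classify files.

```javascript
// Heuristic: ASCII/UTF-8 text files (CSS, JS, HTML) rarely contain NUL bytes,
// while images and most other binary formats almost always do.
// Caveat: UTF-16 encoded text also contains NULs and would be flagged as binary.
function looksBinary(buffer, sampleSize = 8000) {
  const end = Math.min(buffer.length, sampleSize);
  for (let i = 0; i < end; i++) {
    if (buffer[i] === 0) {
      return true;
    }
  }
  return false;
}

// Route a file to the tool the tip recommends for it.
function toolFor(contents) {
  return looksBinary(contents) ? "Visual SourceSafe client" : "Process Studio";
}

const css = Buffer.from("body { font-family: Arial; }\n", "utf8");
// PNG signature (89 50 4E 47 0D 0A 1A 0A) followed by the first chunk length (00 00 00 0D).
const png = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a, 0x00, 0x00, 0x00, 0x0d]);

console.log("custom.css ->", toolFor(css));
console.log("logo.png ->", toolFor(png));
```

In practice you would read each file with Node’s `fs.readFileSync` and pass the resulting `Buffer` to `toolFor`.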

Cheers!

Friday, July 2, 2010

Three paths to an authenticated session, Part 4: Single Sign-On

Part 1: Introduction

Part 2: Built-In Authentication

Part 3: Delegated Authentication

Your users interact with more than one online application within your institution. To do otherwise is just plain unusual these days. Perhaps you have one application for IRB processes and another for Grants. Then there’s your information portal, ordering system, accounting system, HR system, and a whole host of other possible applications. This is the reality of today’s world. While the universal goal is to put everything a user needs within arm’s reach, the unfortunate truth is that the Swiss Army knife approach to life in a research institution is difficult to achieve in a single application. The next best thing is integrating different applications.

Click Commerce Extranet is certainly a great step in that direction because it enables you to put many research-related activities under one roof, but there will always be other systems. Once we accept this, the real question is how we make this easier on the users. One pain point is having to identify yourself to each system by logging in. Thankfully, this is a very easy problem to solve by implementing Enterprise Single Sign-On (ESSO).

Tuesday, June 22, 2010

Three paths to an authenticated session, Part 3: Delegated Authentication

If you’re just joining this topic, take a peek at:

Part 1: Introduction

Part 2: Built-In Authentication

If Built-In Authentication doesn’t strike your fancy, then you’re in luck. Click Commerce Extranet provides the flexibility for you to roll your own using an External Authentication Data Source. The two most common approaches are to use LDAP or Active Directory, though nothing prevents you from using any other source, such as an external database, your HR system, etc.

The Extranet Authentication Process

As mentioned in the last post, all access to an authenticated page must first go through a login page. This doesn’t mean, however, that the user will ever see the login page. At the heart of this page is the Login Component. On that component exists logic that is executed as the login page is being rendered to facilitate both Delegated Authentication and Single Sign-On. The following steps are performed:

- Check to see if the user is externally authenticated

- If not, present the Login form and collect username and password

- Authenticate the user externally

- If unsuccessful, authenticate the user locally

- Create authenticated session
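The five steps above can be read as a simple decision flow. The sketch below models it with plain objects; `request.externalUser`, `request.form`, `authenticateExternally`, `authenticateLocally`, and the returned session shape are all hypothetical stand-ins, not the Login Component’s actual code.

```javascript
// Hypothetical model of the login page's decision flow; not Extranet code.
function login(request, { authenticateExternally, authenticateLocally }) {
  // Step 1: an upstream system may already have identified the user.
  if (request.externalUser) {
    return { authenticated: true, userID: request.externalUser, via: "external session" };
  }
  // Step 2: otherwise, the login form collects the credentials.
  const { userID, pwd } = request.form || {};
  if (!userID) {
    return { authenticated: false, showLoginForm: true };
  }
  // Step 3: try the external authentication source first.
  if (authenticateExternally(userID, pwd)) {
    return { authenticated: true, userID, via: "external source" };
  }
  // Step 4: fall back to the user information stored locally.
  if (authenticateLocally(userID, pwd)) {
    return { authenticated: true, userID, via: "local database" };
  }
  // Failure: keep the message deliberately generic.
  return { authenticated: false, message: "Login failed." };
}
// Step 5 is represented by any returned object with authenticated: true.

// Hypothetical credential stores used to exercise the flow.
const matches = (store) => (id, pwd) => store.has(id) && store.get(id) === pwd;
const auth = {
  authenticateExternally: matches(new Map([["jdoe", "ldap-secret"]])),
  authenticateLocally: matches(new Map([["admin", "local-secret"]])),
};

console.log(login({ form: { userID: "jdoe", pwd: "ldap-secret" } }, auth).via);
console.log(login({ form: { userID: "admin", pwd: "local-secret" } }, auth).via);
```

The `matches` helper checks `store.has(id)` first so that an unknown user with no password can never compare `undefined === undefined` and slip through.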

In delegated authentication, the user is presented the Extranet login screen and asked to provide their credentials. These credentials are then validated against an external data source, such as LDAP. All Extranet does, however, is call a method that is intended to be overridden to contain whatever you need to do to authenticate the user.

If you launch Entity Manager, you will be able to find an Entity Type named “ExternalConnector”. This is an abstract EType. Customizations should subtype this to implement single sign-on and other forms of external or delegated login authentication.

The method that Extranet calls to allow you to externally validate the provided credentials is “authenticateUser(userID, pwd)”. This method needs to return either true or false. If the return value is false, the authentication fails and an intentionally generic message is displayed to the user to communicate this fact. If the method returns true, the caller retrieves the logged-in user now associated with the current session. If there is one, it presumes a successful login has been accomplished; otherwise, it performs the login itself, validating the credentials as a standard Extranet user.

You can see a basic template for the method here. The ExternalConnector Entity Type also includes an excellent description of the authenticateUser method.
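Since the linked template isn’t reproduced here, the following is only a sketch of the shape such an override might take, written as standalone JavaScript classes so it can run outside Extranet. The only contract taken from this post is that `authenticateUser(userID, pwd)` returns true or false; `DirectoryClient`, its `verify` method, and `MyDirectoryConnector` are hypothetical placeholders for whatever LDAP or Active Directory call your implementation uses.

```javascript
// Hypothetical stand-in for an LDAP/Active Directory client; a real
// implementation would perform a bind or lookup against the directory.
class DirectoryClient {
  constructor(accounts) {
    this.accounts = new Map(Object.entries(accounts));
  }
  verify(userID, pwd) {
    return this.accounts.has(userID) && this.accounts.get(userID) === pwd;
  }
}

// Sketch of an ExternalConnector subtype's authenticateUser method.
class MyDirectoryConnector {
  constructor(directory, log = console.log) {
    this.directory = directory;
    this.log = log;
  }
  authenticateUser(userID, pwd) {
    try {
      // Reject empty credentials before contacting the directory.
      if (!userID || !pwd) {
        return false;
      }
      return this.directory.verify(userID, pwd) === true;
    } catch (e) {
      // Never let a directory error look like a successful login.
      this.log("EXCEPTION MyDirectoryConnector.authenticateUser: " + e.message);
      return false;
    }
  }
}

const connector = new MyDirectoryConnector(new DirectoryClient({ jdoe: "s3cret" }));
console.log(connector.authenticateUser("jdoe", "s3cret"));
console.log(connector.authenticateUser("jdoe", "wrong"));
```

Returning false when the directory throws is a design choice of this sketch: a directory outage then surfaces to the user as the same generic login failure instead of an error page.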

Once you have created your subtype, you need to inform Extranet which subtype to use. This is done by setting the value of a shared attribute named “externalConnectionType” on CustomUtils. This attribute contains a string that is the name of the EType which subtypes ExternalConnector. By default, the value is “ExternalConnector”.

To enable delegated authentication, perform the following steps:

- Locate the Entity Type named CustomUtils in Entity Manager

- Right-click CustomUtils, choose ‘Edit Entities’, and change the value of externalConnectionType from ExternalConnector to the name of your new subtype of ExternalConnector

- Save your changes
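Conceptually, the setting is just a string naming the connector type to use, falling back to ExternalConnector. The sketch below models that lookup with a plain registry object. `connectorTypes`, `resolveConnector`, and `MyLdapConnector` are hypothetical, and the base class returning false is an assumption of this sketch; Extranet resolves Entity Types internally.

```javascript
// Stand-in for the abstract base type. Returning false here is an assumption
// of this sketch, not documented Extranet behaviour.
class ExternalConnector {
  authenticateUser(userID, pwd) {
    return false;
  }
}

// Hypothetical subtype; a real one would consult LDAP or Active Directory.
class MyLdapConnector extends ExternalConnector {
  authenticateUser(userID, pwd) {
    return userID === "jdoe" && pwd === "s3cret"; // placeholder check only
  }
}

// Hypothetical registry standing in for Extranet's Entity Type lookup.
const connectorTypes = { ExternalConnector, MyLdapConnector };

// Models CustomUtils.externalConnectionType: a type name that defaults
// to "ExternalConnector".
function resolveConnector(customUtils) {
  const typeName = customUtils.externalConnectionType || "ExternalConnector";
  if (!Object.prototype.hasOwnProperty.call(connectorTypes, typeName)) {
    throw new Error("Unknown externalConnectionType: " + typeName);
  }
  return new connectorTypes[typeName]();
}

const customUtils = { externalConnectionType: "MyLdapConnector" };
const connector = resolveConnector(customUtils);
console.log(connector.constructor.name, connector.authenticateUser("jdoe", "s3cret"));
```

Failing loudly on an unknown name is deliberate here: a typo in the setting is far easier to diagnose than a silent fallback to the default connector.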

Once complete, your Delegated Authentication implementation should be able to handle the following cases:

- User in Extranet but not in external authentication source

- User in Extranet and in external authentication source

- User not in Extranet and in external authentication source

- User not in Extranet and not in external authentication source

Of course, there are a few details left out in this post such as the specifics of how you connect to your external authentication source and validate the user credentials. Those details vary by implementation. By far, the most common external source is LDAP or Active Directory. Click Professional Services has plenty of experience in implementing Delegated Authentication and can get you up and running quickly. If you’re interested in enhancing your site in this way, just let us know. We’ll be glad to help.

Cheers!

Wednesday, June 9, 2010

Three paths to an authenticated session, Part 2: Built-In Authentication

If you’re just joining this topic, take a peek at:

Part 1: Introduction

By default, Click Commerce Extranet based sites allow a user to sign in using built-in authentication. This means that the site presents its own login screen and all user account information, including user ID and password, is maintained within the site’s database. The user will be presented the login screen in one of two cases:

- When browsing a public page, the user clicks the login link in the upper right corner.

- The user attempts to access a secured page directly. For example, they click a link to a project workspace in an email notification.

In both cases, the user next sees a page with the user ID and password prompts.

Did you know that you can make your own login page? The login fields themselves are presented as part of the Login component, which you can place on any page. In the starter site, there is an entire page dedicated to login, but that’s just a configuration choice. Some sites have put the login component directly on their home page.

When an anonymous user requests access to a secured page, Extranet will redirect them to the page where they can log in. The page they are sent to is configured on the secured page or on one of the pages above it in the page hierarchy. One of those pages must have a login page defined for it. The settings are found on the Properties dialog for the page.
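The lookup described above can be pictured as a walk up the page hierarchy until a page with a configured login page is found. In this sketch, the `parent` and `loginPage` properties and the page paths are hypothetical; in Extranet, the setting lives on each page’s Properties dialog.

```javascript
// Walk from the requested page toward the root and return the first
// configured login page, or null if no page defines one.
function findLoginPage(page) {
  for (let current = page; current; current = current.parent) {
    if (current.loginPage) {
      return current.loginPage;
    }
  }
  return null;
}

// Hypothetical page tree: the root and the IRB section each define a login page.
const root = { name: "Home", loginPage: "/Login", parent: null };
const irb = { name: "IRB", loginPage: "/IRB/Login", parent: root };
const workspace = { name: "Project Workspace", loginPage: null, parent: irb };
const grants = { name: "Grants", loginPage: null, parent: root };

console.log(findLoginPage(workspace)); // → /IRB/Login
console.log(findLoginPage(grants));    // → /Login
```

Configuring a login page on the root guarantees that the walk always ends with an answer, which is why the root is the minimum requirement.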

At a minimum, the root page in your site needs to have a login page configured for use by all pages, but you have the option of creating additional login pages for use in different sections if you wish. Extranet will redirect the user to the configured login page whenever authentication is required. The login page will look something like this:

With built-in authentication, the user is required to enter a valid user ID and password. Extranet then uses this information to authenticate the user against the local database. If authentication fails, a message is displayed; otherwise, the user is redirected to the page they were originally trying to reach. If the original URL is the default URL for the site, or the user clicked the login link themselves, they will be directed to their default page once authenticated. In most cases, this would be their personal page.
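The redirect rule in the preceding paragraph reduces to a small decision. The input names and URLs below are hypothetical and only illustrate it; treating a missing original URL like a clicked login link is an assumption of this sketch.

```javascript
// Decide where to send a user after a successful built-in login.
function postLoginDestination({ originalUrl, clickedLoginLink, defaultSiteUrl, personalPageUrl }) {
  // A clicked login link, a missing original request (assumption), or the
  // site's default URL all lead to the user's default page (usually their personal page).
  if (clickedLoginLink || !originalUrl || originalUrl === defaultSiteUrl) {
    return personalPageUrl;
  }
  // Otherwise, return the user to the secured page they were trying to reach.
  return originalUrl;
}

const site = { defaultSiteUrl: "/", personalPageUrl: "/users/jdoe" };
console.log(postLoginDestination({ ...site, originalUrl: "/projects/IRB00123", clickedLoginLink: false }));
console.log(postLoginDestination({ ...site, originalUrl: "/", clickedLoginLink: false }));
```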

At the time the login page is rendered, there are a couple of additional checks that are made to determine which authentication approach is to be used. As built-in authentication is the default, you don’t have to do any additional configuration to allow your users to login (other than to make sure they have accounts, of course).

Use of Built-In Authentication also makes it easy to use several other built-in features that you can configure via Login Policy administration or the Login Component including:

- Remember Me

- Password Policies

- Forgot User ID and Forgot Password feature

- Account Lockout Policies

- Terms and Conditions

- Legal Notification

In the next post, I’ll explain what is required to enable delegated authentication.

Cheers!

Sunday, June 6, 2010

Three paths to an authenticated session, Part 1: Introduction

So, given that, how does a user uniquely identify themselves? Click Commerce directly supports three paths:

- Built-In Authentication

- Delegated Authentication

- Enterprise Single-Sign-On

Built-In Authentication

Click Commerce Extranet includes integrated authentication, which presents the user with a login form where they provide their User ID and Password. These credentials are then verified against the user information stored locally within the site’s database. To use this, all you have to do is define your users in the site; the rest is already in place.

Delegated Authentication

As an enterprise application, Extranet also supports a mode where authentication is performed by validating credentials against an external source. Common examples are LDAP and Active Directory, but the source need not be limited to those. The real difference with this approach is that passwords are not maintained in Click Commerce. Instead, they are maintained as part of an external user authentication data source. The user is still presented the Extranet login screen so that the User ID and Password can be provided, but this information is then delegated to an external authentication service. Once authenticated externally, the user is allowed into the site.

Enterprise Single-Sign-On

Taking advantage of ESSO means that all elements of authentication are performed outside of Click Commerce Extranet. While there is no standard approach that all implementations use, the most common approach involves a technique I call “Intercept, Authenticate, and Redirect”:

- Intercept - Any request for a secured page within the site is intercepted by an IIS Filter installed as part of ESSO. The user is then presented with a login form owned by the ESSO implementation. This is not the Extranet login screen.

- Authenticate - The user enters their credentials into the ESSO login screen, and they are then authenticated against the authentication source. This source can be anything, including Active Directory, LDAP, or a facility provided by the SSO vendor. From the perspective of Extranet, it really doesn’t matter.

- Redirect - Once the user is authenticated, the ESSO product will insert a unique identifier for the user into the HTTP Request Header then redirect the user back to where they were originally trying to go in the first place. This time, Extranet will be allowed to handle the request. At this point the user has been authenticated externally but that doesn’t mean that they have already established an authenticated session within the Extranet site. This is handled in Extranet by retrieving that unique identifier from the HTTP Header and using it to look up the user in the local Extranet database. If there is a match, Extranet will create an authenticated session for the user and allow them in.
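The Extranet half of the Redirect step can be sketched as follows. The header name `x-remote-user` and the `usersById` lookup are assumptions for illustration; the actual header depends on the ESSO product and how it is configured.

```javascript
// Hypothetical header name; the real one depends on the ESSO product and its configuration.
const SSO_HEADER = "x-remote-user";

// headers: object with lower-cased header names, as Node's http module exposes them.
// usersById: Map standing in for the local Extranet user lookup.
function establishSession(headers, usersById) {
  const rawId = headers[SSO_HEADER];
  if (typeof rawId !== "string" || rawId.trim() === "") {
    // No identifier: the request did not come through the ESSO filter.
    return { authenticated: false, reason: "no SSO identifier" };
  }
  const user = usersById.get(rawId.trim());
  if (!user) {
    // Authenticated by ESSO, but there is no matching account in this site.
    return { authenticated: false, reason: "no matching Extranet user" };
  }
  return { authenticated: true, user };
}

const usersById = new Map([["jdoe", { id: "jdoe", name: "Jane Doe" }]]);
console.log(establishSession({ "x-remote-user": "jdoe" }, usersById));
console.log(establishSession({}, usersById));
```

Trusting such a header is only safe when the ESSO filter removes any client-supplied copy of it, so that only the ESSO product can set the value Extranet reads.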

Some examples of ESSO packages include Shibboleth, Pubcookie, Cosign, Computer Associates SiteMinder, and Tivoli TIM/TAM.

All three approaches have advantages and disadvantages, but as a set of options, they cover all of the authentication approaches we’ve seen to date. The decision to use a particular approach is often made for you by institutional policy or your central IT group.

This was just a high-level introduction. In subsequent posts, I’ll be digging into how to leverage the integration points provided by Click Commerce Extranet to allow your site to easily plug into any of these authentication approaches.

Part 2: Built-In Authentication

Cheers!

Wednesday, May 12, 2010

What’s new in Professional Services

With only one week before the annual Click Compliance Consortium meeting in Chicago, the office is buzzing. The agenda is firming up, and it looks like we’ll be hovering near record attendance levels. Each CCC has its own flavor thanks to the fact that it’s really an event hosted, presented, and attended by members of the consortium itself – yes, that means you. Of course, there’s always the expected presentation by those of us at Click Commerce, but it’s learning what all of you are doing and the free exchange of ideas that I look forward to the most.

This year we’re trying something different that I’m excited about. On the second day, the agenda splits into three tracks, and we all get to spend a couple of hours in an open discussion about one of three topics: IRB, Grants, or Animal Operations. I, with the help of a couple of customers, will be moderating the Animal Operations discussion, and I hope those of you with interest will be able to join in on the conversation. I’m hoping that this will be a lively session.

Closer to home, I’ve been continuing the drive to refine our development practices. I’m not quite ready to share any results but here’s a short list of things we’re working on:

Formalization of best practices related to the development of reports using SQL Server Reporting Services.

We presented on this at C3DF and I’ll touch on it again during my CCC Reporting presentation. We’re currently experimenting with different applications of this technology so that we can determine the situations where it is a good fit and, equally important, where we would recommend other approaches.

Automated Testing

I’m especially excited about our early progress on this topic. One of the biggest challenges in any enterprise scale development project is knowing that the most recent change has no adverse effects. Did this cool new enhancement break any pre-existing capabilities? What is the performance impact of this new enhancement? We’re currently implementing automated tests for a large IRB project using three open source tools (Cucumber, Watir, and Celerity). It’s amazingly rewarding to push a button and see the browser run through an entire workflow scenario without any further assistance from me. I’ve come to really like the color green, which is what the final report shows for tests that pass. When I see red on the other hand, representing failed tests, I get a strong urge to immediately make it green. I’ll definitely be blogging more about this in the future.

Introduction of Agile development practices

One of my own personal goals is to improve the way information flows from Project Manager to developer, provide a more real time snapshot of the status of the project, and establish good development techniques early in the project before the stress level is too high to want to try something new. For all of these reasons, I’m a fan of an Agile development methodology. In my mind, the development model I prefer is to establish a routine from the beginning of the project which is based upon a repeating cycle of development that involves these elements:

- Establish 3 stores: Development, Test, and Future Production. The development store is integrated with Source Control from day 1, and implementation is broken down into 2-week iterations. At the end of each iteration, a patch is created and applied to both Test and Future Production.

Test is where all user acceptance testing takes place. Testing on the development store is limited to the developers themselves testing each other’s work as part of unit testing.

Future Production starts out as a pristine store, empty of all data, and is given the white glove treatment. As patches are built after each iteration, they are applied to this store to keep it up to date. In this way, we have a clean store to use when going live without first having to worry about clearing out all the data that collects through the course of development and testing.

- Only update Test and Future Production via patches. This means that the same process we recommend once your site is live is enforced early in the project. In my mind, this has many benefits. It means that by the time you go live, this process is second nature, and it avoids the “deer in the headlights” feeling of being asked to introduce this process at the time of going live, which is a time when you are already stressed about a lot of other things.

- Test incrementally. Thinking about how to test the entire system all at once is a bit frightening. Through the process of applying patches after each development iteration, we can parcel out the testing burden and, at the same time, get end user feedback much earlier in the development process, when there is actually time to respond properly.

As a part of this effort, we’ve also been piloting the use of a new tool to help us track all the development and so far it’s being received well. At the end of our pilot, I’ll post a summary of our experiences and conclusions.

I look forward to seeing all of you at the CCC conference next week in Chicago!

Cheers!

Sunday, May 2, 2010

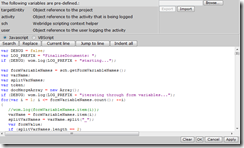

Script Editor Extensions for Extranet 5.6

Recently, I’ve been wanting to update the editor so that it would be compatible with Extranet 5.6, and this weekend I took the time to do just that. I’m happy to announce version 2.0 of the Script Editor Extension.

All previous features were retained:

- Automatic Syntax Highlighting for JScript

- Automatic indentation according to JScript syntax rules

- Extensions for both the Workflow Script Editor and the Command Window

- Completely rewritten to avoid inclusion of base Extranet pages in the Script Editor Extension download package. This does imply making a minor change to the standard editor pages but it is as simple as adding a single symlink. Nothing already on the base pages is altered in any way. My hope is this will make it easier to adopt new base enhancements as they are released.

- Deployable as a standard Update via the Administration manager

- Automatically resizes the editor as the page is resized

- The color scheme used for syntax highlighting matches the standard color scheme used in Site Designer and Entity Manager.

Though this is provided as-is and is not part of the standard product, I’d love to hear your feedback. I plan to use this myself and if you encounter any issues, there’s a good chance I’ll post updates.

Cheers!

Friday, April 23, 2010

Retrieving production WOM Logs using only the browser

From the “Did you know” department comes a nice little reminder about a standard feature that is often forgotten.

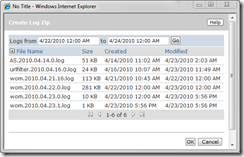

Have you ever found yourself being asked to troubleshoot a problem on production? The first question I always ask is “What’s in the WOM Log?”, only to find out that, though I can browse to the site, I’m not allowed to remote into the server. I wholeheartedly support the security rule preventing server-level access, but that doesn’t change the fact that I want to take a peek at the WOM Log. Enter WOM Log Center to save the day.

As a Site Manager on the site, you have the ability to get your hands on the WOM log files any time you want via the WOM Log Center. This feature allows you to package up all Log files last modified within a specific date range into a single zip archive and download them to your desktop for your reading pleasure. You even have the ability to download the zip as an encrypted file to hide data from prying eyes.
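The selection WOM Log Center performs, picking log files by last-modified date, amounts to a simple range filter. The file names and dates below are hypothetical, and whether the real range is inclusive at both ends is an assumption of this sketch.

```javascript
// Return the names of log files whose last-modified time falls within
// [from, to], inclusive at both ends (an assumption of this sketch).
function selectLogs(files, from, to) {
  return files
    .filter((f) => f.modified >= from && f.modified <= to)
    .map((f) => f.name);
}

// Hypothetical log files with their last-modified timestamps.
const logs = [
  { name: "wom_0420.log", modified: new Date("2010-04-20T23:59:00Z") },
  { name: "wom_0421.log", modified: new Date("2010-04-21T12:00:00Z") },
  { name: "wom_0422.log", modified: new Date("2010-04-22T08:30:00Z") },
];

console.log(selectLogs(logs, new Date("2010-04-21T00:00:00Z"), new Date("2010-04-22T23:59:59Z")));
```

On a real file system, the `modified` value would come from something like Node’s `fs.statSync(path).mtime`.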

You can find this handy utility right on your site in the Site Options tab on the Site Administration page.

Cheers!

- Tom

Monday, April 19, 2010

Hybrid CDTs and Cloning

Cloning in one form or another has been in existence with the starter sites for a long time. It’s used for a variety of purposes, initially to support the Amendment process and later to support things like Project templates. I’ve even seen it used as a means to generate large volumes of test data. In order for cloning to work, it must respect a certain set of rules. These rules allow the result of the clone (i.e. the copy) to adhere to all the structural and relational rules that define a project. Some examples include:

- The copy must have a unique ID

- All Data Entry CDTs must be uniquely owned by the project

- Persons, Organizations, and Selection CDTs must not be duplicated as they are considered to be shared resources, referenceable by multiple projects (among other things).

So what does this have to do with the Hybrid CDT approach? Keep in mind that the Hybrid CDT approach uses the default behavior for Data Entry and Selection CDTs in a unique way to take advantage of the native user interface controls, thus avoiding the need to craft a custom UI. To accomplish this, we use two CDTs: A Data Entry CDT that “wraps” a Selection CDT. From a UI perspective this gives us the flexibility that we need. However, from a data persistence perspective we’re actually deviating from the standard definition of a Selection CDT. Rather than the selection CDT entity being a shared resource that can be referenced by multiple entities, we change the rules a bit and only allow the selection CDT to be referenced by the data entry CDT that wraps it. In turn, being a data entry CDT, it can only be “owned” by one project. The combination of the two really represents data that should be managed as a data entry CDT entity.
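A toy clone routine makes the consequence concrete. It applies the default rules listed earlier, copying owned entities such as Data Entry CDTs while sharing Persons, Organizations, and Selection CDTs, and then runs them against a Hybrid CDT pair. The entity shape (`kind`, `id`, `selection`) and the `nextId` counter are hypothetical; this is not EntityCloner’s implementation.

```javascript
let nextId = 100; // hypothetical ID source for new entities

// Kinds treated as shared resources: the copy references them, never duplicates them.
const SHARED_KINDS = new Set(["person", "organization", "selectionCDT"]);

function cloneProject(project) {
  const copyChild = (entity) =>
    SHARED_KINDS.has(entity.kind)
      ? entity // shared: the copy points at the very same entity
      : { ...entity, id: nextId++ }; // owned (e.g. a Data Entry CDT): new entity with its own ID
  return { ...project, id: "P" + nextId++, children: project.children.map(copyChild) };
}

// A Hybrid CDT: a Data Entry CDT wrapping a Selection CDT entity.
const selection = { kind: "selectionCDT", id: 3 };
const hybrid = { kind: "dataEntryCDT", id: 2, selection };
const original = { id: "P1", children: [{ kind: "person", id: 1 }, hybrid] };

const copy = cloneProject(original);
console.log(copy.children[1] !== hybrid);              // true: the wrapper was copied
console.log(copy.children[1].selection === selection); // true: the Selection CDT is shared
```

The second `true` is the problem in a nutshell: after the clone, both projects reference the same Selection CDT entity.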

The clone method in EntityCloner, however, has no idea that we changed the rules in the case of the Hybrid CDT, so it still clones Data Entry CDT entities but does not clone Selection CDT entities. This means that, following a clone, the same entity is referenced by both the original and the copy. This should not be allowed. To overcome this problem, we need to enhance EntityCloner to be aware of this new special case. The good news is that the enhancement is very simple to implement. The bad news is that it requires a base enhancement. Here’s what you need to do…

Via Entity Manager, edit the EType named EntityCloner. On that type, there is a method named isClonable. This method is called by the clone process to determine if an entity should be cloned. It returns a Boolean where true means clone and false means don’t. In that method, you’ll find the logic that makes this determination. The part we’re interested in looks like this:

```javascript
if (
    entitytype == Document ||
    entitytype == WebPage ||
    (entitytype.inheritsFrom(CustomDataType) && entitytype._usage == "dataEntry") ||
    (entitytype.hasTypeMethodNamed("isClonable") && entitytype.isClonable()) ||
    entitytype.inheritsFrom(CustomAttributesManager) ||
    entitytype == HistoricalDocument ||
    entitytype == ResourceHistory
)
{
    clonable = true;
}
```

You will want to enhance it by adding the Selection CDT condition as shown here:

```javascript
if (
    entitytype == Document ||
    entitytype == WebPage ||
    (entitytype.inheritsFrom(CustomDataType) && entitytype._usage == "dataEntry") ||
    // Added for Hybrid CDTs: a Selection CDT is cloned only if its type says so.
    (entitytype.inheritsFrom(CustomDataType) && entitytype._usage == "selection") &&
    (entitytype.hasTypeMethodNamed("isClonable") && entitytype.isClonable()) ||
    entitytype.inheritsFrom(CustomAttributesManager) ||
    entitytype == HistoricalDocument ||
    entitytype == ResourceHistory
)
{
    clonable = true;
}
```

This will cause the method to determine whether a Selection CDT entity is to be cloned by asking the entity’s type rather than just blindly saying “don’t clone”. The next step is to add the isClonable() method as a per-type method to each of the Selection CDTs that exists as one half of a Hybrid CDT. The method you will add looks like this:

function isClonable()As EntityCloner is a base Extranet type, you will need to track this change in case you need to reapply it after a base Extranet upgrade but, once implemented, you can safely clone projects which leverage the Hybrid CDT technique.

{

try {

// this selection CDT is used as part of a Hybrid CDT pair so entities of this type need to be cloned.

return true;

}

catch (e) {

wom.log("EXCEPTION _YourSelectionCDT.isClonable: " + e.description);

throw(e);

}

}
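You can exercise the decision made by the enhanced EntityCloner condition outside Extranet by using mock types. This is a minimal sketch under stated assumptions. `MockType` and its `inheritsFrom`, `hasTypeMethodNamed` and `isClonable` members are hypothetical stand-ins for the Extranet EType members used in the condition, the type names are made up, and only the CustomDataType branches of the condition are modeled.

```javascript
// Hypothetical mock of an entity type. It imitates only the members the
// EntityCloner condition relies on: inheritsFrom(), _usage, hasTypeMethodNamed().
class MockType {
  constructor(name, parents, usage, typeMethods) {
    this.name = name;
    this.parents = parents;         // names of ancestor types
    this._usage = usage;            // "dataEntry" or "selection"
    this.typeMethods = typeMethods; // per-type methods, keyed by name
  }
  inheritsFrom(typeName) {
    return this.parents.includes(typeName);
  }
  hasTypeMethodNamed(methodName) {
    return typeof this.typeMethods[methodName] === "function";
  }
  isClonable() {
    return this.typeMethods.isClonable();
  }
}

// Only the CustomDataType branches of the enhanced condition are modeled.
// Short-circuiting means isClonable() is only called when the type defines it.
function isClonable(entitytype) {
  return (
    (entitytype.inheritsFrom("CustomDataType") && entitytype._usage === "dataEntry") ||
    (entitytype.inheritsFrom("CustomDataType") &&
      entitytype._usage === "selection" &&
      entitytype.hasTypeMethodNamed("isClonable") &&
      entitytype.isClonable())
  );
}

const cdt = ["CustomDataType"];
const dataEntry = new MockType("_MyDataEntryCDT", cdt, "dataEntry", {});
const sharedSelection = new MockType("_MySharedSelectionCDT", cdt, "selection", {});
const hybridSelection = new MockType("_MyHybridSelectionCDT", cdt, "selection", {
  isClonable: () => true // the per-type method added to Hybrid CDT selection types
});

console.log(isClonable(dataEntry));       // true
console.log(isClonable(sharedSelection)); // false
console.log(isClonable(hybridSelection)); // true
```

The sketch shows why the check asks the type instead of changing the rule for all Selection CDTs. Ordinary, genuinely shared Selection CDTs keep the default "don’t clone" behavior, and only the types that opt in through the per-type method are cloned.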

Cheers!

Monday, March 15, 2010

Upgrades, New Sites, and New Projects – Oh My!

Since we last “spoke,” those of us in Click Professional Services have participated in the successful upgrade of existing customer sites to Extranet 5.6, helped usher in brand new compliance sites, and kicked off several new projects. It’s only fair to mention that none of this would be possible without your significant efforts. Don’t be shy, you know who you are.

Let’s take the process of upgrading to Extranet 5.6, for example. While we can help with all the technical bits and guide your effort, an upgrade will never go smoothly without the diligence of your technical and business staff in regression testing your site to verify that there are no upgrade surprises. From a calendar time perspective, this is the biggest part of any upgrade. This past weekend was the culmination of a well-executed upgrade project for an IRB and CTRC site. Though I stayed within reach of my computer all weekend just in case I was needed, I only received a couple of calls, and the upgrade completed without any serious complications. This is a testament to the work done by the site owner to properly test and prepare for the actual upgrade, and it proves that a well-executed upgrade doesn’t need to be characterized by a plethora of crossed fingers. I’m not saying it can be completely stress free, as there are always those little worries and time pressures, but those who are well prepared can keep those worries from defining the effort. Congratulations on a well-executed upgrade!

Also this past weekend, I supported the rollout of a brand new IACUC and IBC site. New site deployments are often tricky because the communication plan, which includes all the pre-launch training, the “new site is on its way” announcements, and all the promises up the management chain, really increases the stress level. First impressions of a site are so critical to its near-term success that failing to meet the commitments made about the whens and whats damages those first impressions. On the flip side, rolling out the site as promised, on time and with the advertised features, is the first step in establishing the long-term success of the site. No pressure, right? The number of variables involved in rolling out a site also contributes to the stress level. Did the legacy data load work? Were any of the steps missed? Can the users properly log in? I’m so tired, why didn’t I eat a good breakfast this morning? Did I forget to turn off the stove? OK – those last two may seem unrelated, but once we start down the path of worrying about all that can go wrong, the lines seem to blur a bit – or is that just me?

Just like an upgrade, most sources of angst can be minimized through proper preparation, practice, and testing. This means allocating time in the schedule for a “let the dust settle” period between development/test and deployment. This period is used to do all the little things that are often forgotten, such as triple-checking the things that caused you to lose sleep and making sure you turned off the stove. Hopefully this will help you gain a small amount of calm confidence.

Even if you aren’t able to achieve the desired level of calm, don’t worry. Realize that your users are new to this site as well, and you know more than they do about how to use it. Expect new issues to be discovered after going live, and expect a series of configuration updates to be applied to incrementally correct and tune the site. The feedback you will get over the first few weeks (and beyond) is a great source of information and informs your release planning process. Constructive feedback is not only a sign that users are using the site but also that they are invested in its success. If you haven’t already put your SDLC into action, now is the time, because you have actual users, live data, and a steady stream of future enhancements to push out the door. Accept the fact that a site is never done, but by all means celebrate your achievement of going live! Now where’s the party?

Cheers!

Ideas that worked and those that haven’t (yet)

Over the past year, I’ve released a few development techniques into the wild, not knowing if they would return to bite me or flourish. I’m happy to report that I’ve yet to be bitten. Here are the techniques that are starting to flourish:

- Hybrid CDT – I’m aware of this technique being deployed in at least 5 customer sites so far with great success.

- ProjectValidationRule – To my knowledge, this is being used in 3 customer sites so far, and I’ve already been getting enhancement requests, which is a good sign.

- Using JQuery and Ajax in your site – To be honest, this technique shows a pretty basic pattern, so it’s difficult to say whether my post spurred you to action or you came up with it on your own. Still, I’m personally aware of 6 sites that use this or a variation, and I’ve seen a couple of very impressive uses of Ajax to implement things like inline form expansion to facilitate the entry of CDT data instead of using the standard popup. I have no doubt we’ll be seeing more examples of this approach over the coming months, including in the base Extranet product. I’m looking forward to the Ajax-driven choosers in Extranet 5.7, for example.

I also blogged about a couple of experiments I was trying which, unfortunately, have yet to work out. They are:

- Modules and Solution support in Process Studio – For those of you unfamiliar with this feature, it was released as a “technology preview” in Extranet 5.6 (which basically means it hasn’t been verified to work in all cases). The goal of this feature is to let you categorize your workflow elements into different modules and to define Solutions that are composed of those modules. When you build a configuration update, you can select which solutions to include. It’s an exciting idea because, when it works, it will allow you to manage separate release schedules for each of the solutions that coexist in a single site. I gave it a try on a recent project and was able to identify a few issues that make it unusable in its current version. The effort was still useful, because we were able to both identify the issues and, through practice, suggest to the Engineering team the short list of fixes that would be required before it can be tried again. I’m still very excited about this feature and will definitely give it another go when the few critical issues have been addressed.

- Object Model documentation approaches – I’ve blogged about various data modeling techniques as well as the importance of establishing your data model as early in the project as possible. One of the challenges in getting a model implemented is how to review the object model design before investing in actually implementing it. I explored various tools against a few key evaluation objectives and eventually settled on two open source tools: StarUML and Doxygen. I was very pleased with the results when using them as initial design tools but less pleased that they weren’t integrated into the overall implementation process. What was required was round-trip engineering support. Once a model is designed, it would be great to then auto-create or update the Project Types, Custom Data Types and Activity Types in the development store. It would be equally nice to publish the implemented model back into StarUML. I can see how both could be accomplished but failed to find the time to make it happen. I still use this technique for initial model design and review, but it becomes too costly to maintain once development is underway. One day, I hope to find the time to add round-trip support. In the meantime, the Applications team is doing some promising work to generate eType reference pages in real time. I like this approach because those reference pages are always accurate. The downside is that the model needs to actually be implemented before those pages will work, and reviewing a design after implementing it seems a bit counterintuitive. The value of that technique increases dramatically after the model has been reviewed and implemented for real.

There are a couple of other techniques which I hope will be useful but have not heard about since released into the wild:

- Extranet to Extranet Single Sign-On. I’d love to hear if you have put this technique into practice.

- Script Editor Update – This was one of my earliest posts, and it’s been fun to stumble upon customer sites where it has been put into practice. It wasn’t perfect by any means, and I still have the list of improvements some of you have suggested and hope to implement them some day. I’ll verify that it works on Extranet 5.6 and, if it doesn’t, publish a new version. If you already know whether it does, please let me know.

So, no real failures but a couple of experiments that need more time to mature and a couple that haven’t flourished…yet. The efforts that flourished are enough for me to want to keep throwing ideas your way. If you find them useful (or even if you don’t), let me know. I’d love the feedback.

Cheers!

Tuesday, January 26, 2010

Kicking off the new year in high gear

We're nearly one month into 2010, and I'm excited about all that's happening around the office. Here are a few highlights:

- CCC membership is growing fast, which means that all of us in Professional Services are keeping very busy as we continue to grow our staff to meet the ever-increasing demand. This is good stuff, and I welcome all the new CCC members.

- We have our first Grants customer live on Click Commerce Extranet 5.6 and within days of going live they electronically submitted their first grants application to Grants.gov using Click Commerce SF424 1.7. Milestones like this are always rewarding.

- We just completed delivery of our Introduction to Process Automation course last week.

- C3DF preparations are in high gear and I hope to see many of you here next month.

- Our upcoming Advanced Workflow Configuration course, scheduled for the Monday immediately following C3DF, is already full, and more people have signed up for the next offering. This is personally exciting to me because one of the things I enjoy most is sharing what can be accomplished on the Extranet platform.

- We've kicked off a project with the nation's largest IRB (I'll let you wonder who this may be, since the official press release isn't out yet).

- We're kicking off several other projects as well including the NASA IRB project, though we're disappointed that the meeting won't include a trip to the International Space Station. I can only imagine the battle to be a member of that project team if it did!

- We've kicked off our first Grants project that will leverage a completely new approach to budget grid implementation. This new Grid approach is very exciting and will rapidly spread beyond Grants to surface in other solutions such as Clinical Trials, Participant Tracking and Animal Operations.

- We've begun to work through best practices for defining and implementing a new generation of reports. I'll definitely be talking more about this as we roll out our Enterprise Reporting Services.

It's been a frenzied start to the new year, and I've been unable to return to this blog since the relative quiet of the holidays. Realizing that things aren't going to slow down any time soon, I can either accept that I'll go dark on this blog or somehow find the time to keep things relatively current. It's not for lack of topics; I rarely find myself without something to say. Whether or not it's something you actually want to hear is up to you, and I certainly hope to hear from you either way. Not being one to accept defeat so easily, I sit here at my laptop late at night, writing down whatever comes to mind. Hopefully it will make sense to at least some of us. ;-)

In several of my previous posts, I promised I would revisit topics to let you know how things are going and I plan to do just that in my next post. Beyond that, if you have any thoughts on what you want to see in future posts please let me know. I'd love to hear about them. Keep those cards and letters coming!

Cheers!